GPU passthrough experience: Harvester HCI versus Proxmox

How to pass through a GPU to a Harvester HCI virtual machine?

Table of contents

- GPU Passthrough in Harvester HCI

- How is GPU passthrough in Harvester HCI compared to Proxmox?

- Harvester HCI GPU passthrough is not bug-free and foolproof

- My GPU was in the passthrough mode but was bound to amdgpu kernel driver

- I was unable to install the Windows driver for my RX6800 GPU

- Virtual Network Computing (VNC), and graphic processing priority

- Is Intel UHD 770 of Intel 13th Gen supported under GPU passthrough?

- Side note: Harvester HCI does not support USB device passthrough as of v1.3.0

- Conclusion

Recently, Harvester Hyperconverged Infrastructure (Harvester HCI) reached version 1.3.0 with a stable PCI passthrough feature, so I wanted to give it a try with my GPUs. With Windows VMs, GPU passthrough has always been a headache for me due to the infamous code 43: "Windows has stopped this device because it has reported problems". This error code is generic, so it is not easy to troubleshoot the root cause behind it.

In the homelab scene, Proxmox has been a great free and open-source hypervisor of choice, and it became even more popular after the licensing changes that followed Broadcom's acquisition of VMware. If I search for a GPU passthrough guide on Proxmox, aside from the official guide, there are hundreds of variants from people across online discussions that are geared toward a specific add-in board (AIB), vBIOS, host and guest driver version modification, and so on. After reading through many solutions, I noticed a pattern in which the author simply tries out several ways and sees what hits, without being able to explain the root cause. There is nothing bad about it, and I do the same when facing something outside my knowledge domain, where I just want a working solution. Inherently, though, this makes the GPU passthrough experience in Proxmox not very friendly.

In contrast, Harvester HCI is by design a locked-down appliance. With Harvester HCI, I am not supposed to tweak the host environment willy-nilly. If I want a feature, it is a matter of whether Harvester HCI supports it or not, and it is not trivial to hack the thing. Proxmox, on the other hand, has a more open design, so it is easier for the user to tweak the host environment, even from the web GUI. There are trade-offs between the two designs, but when it comes to GPU passthrough, it means there is only one official solution, designed by the Harvester team.

This article was originally published at PCI and GPU passthrough in Harvester HCI vs. Proxmox - hungvu.tech.

GPU Passthrough in Harvester HCI

Passing through a PCI device, a GPU in this case, is trivial in Harvester HCI (assuming all relevant features, like VT-d or IOMMU, are enabled in the system BIOS). Here is how to pass through a GPU in Harvester HCI.

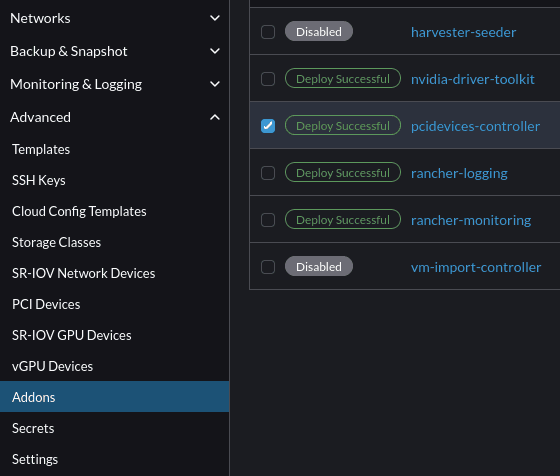

- In the left menu bar, expand Advanced, and go to Addons. Enable and deploy pcidevices-controller.

Go to Harvester HCI's Addons, enable and deploy pcidevices-controller.

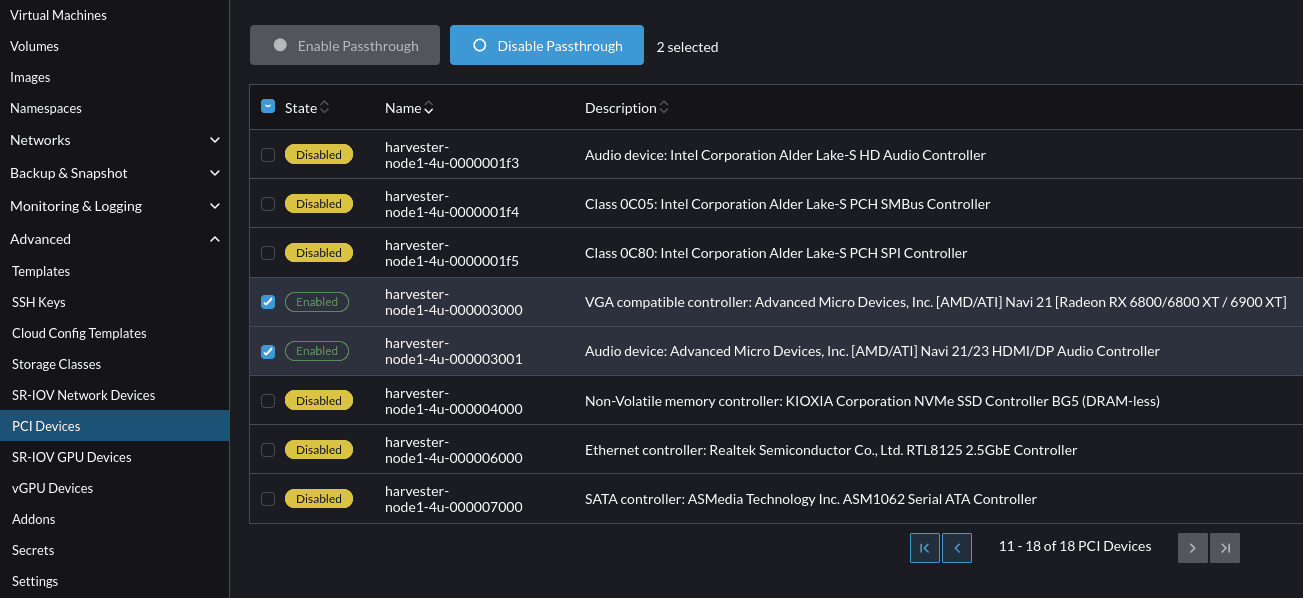

- In the left menu bar, go to PCI Devices, and enable passthrough for the GPU, along with any devices belonging to the same IOMMU group.

Enable PCI passthrough for my Yeston RX6800 16GD6 GPU.

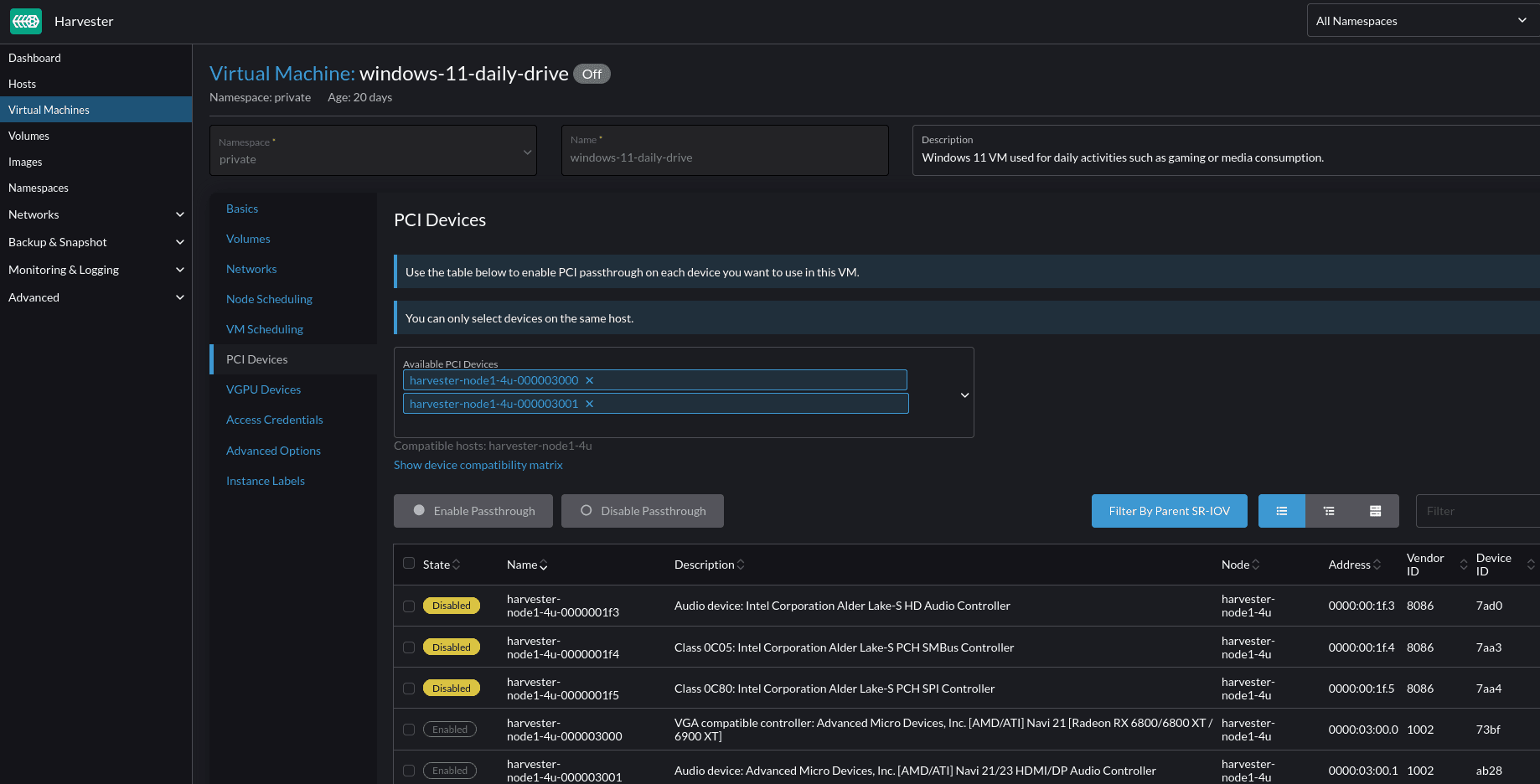

- In the left menu bar, go to Virtual Machines, and enter the Edit mode of the chosen VM. There are two ways to edit, either via the GUI or via the YAML config. I normally use the GUI for convenience. That said, the YAML config comes in handy in certain situations, which I will discuss later. Assign the available PCI devices to the VM. In Harvester HCI, enabling passthrough for a PCI device and assigning that PCI device to a specific VM are two different activities.

Assign the RX6800 GPU and its audio device to my Windows 11 VM.

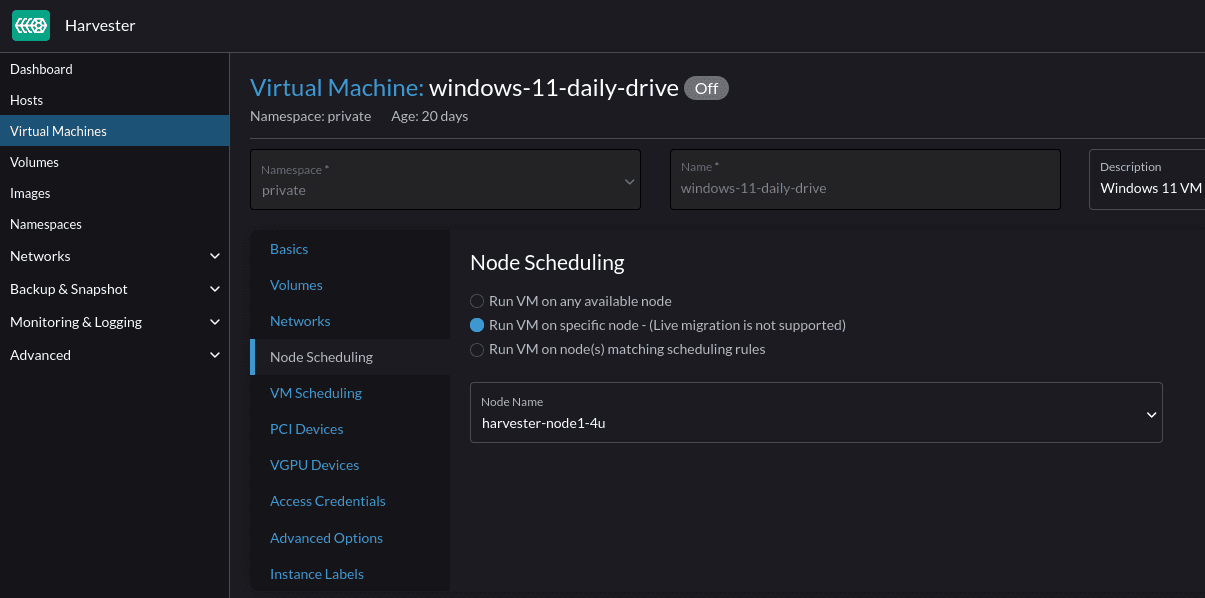

- In the left config menu bar, go to Node Scheduling, and choose Run VM on a specific node - Live migration is not supported, then choose the node where the PCI device is physically installed. In a cluster of Harvester nodes, the VM must live on a node that has the PCI device installed.

Allow Windows 11 VM to only run on a specific node, and disable live migration capability.

And that is it, Harvester HCI will handle the rest of my GPU passthrough. The platform also has native support for Nvidia SR-IOV (GPU). That said, I do not own an Nvidia GPU with SR-IOV capability to test out the feature.
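To see ahead of time which devices share an IOMMU group with the GPU (the "same IOMMU group" requirement in the PCI Devices step above), the group can be read from sysfs on the node. The following is a minimal sketch, assuming shell access to the node. The function name `iommu_group_members` and its optional sysfs-root argument are made up for illustration, and `0000:03:00.0` in the usage note is simply the address used later in this article.

```shell
#!/bin/sh
# List every PCI function that shares an IOMMU group with the given device.
# $1 = PCI address (e.g. 0000:03:00.0)
# $2 = sysfs root, defaults to /sys (hypothetical parameter, useful for trying
#      the function against a mock directory tree)
iommu_group_members() {
    addr="$1"
    sysfs="${2:-/sys}"
    group_link="$sysfs/bus/pci/devices/$addr/iommu_group"

    # The iommu_group symlink only exists when the IOMMU is enabled.
    if [ ! -e "$group_link" ]; then
        echo "no IOMMU group found for $addr (is VT-d/IOMMU enabled?)" >&2
        return 1
    fi

    # Each entry under <group>/devices/ is one PCI function in the group.
    ls "$group_link/devices" | sort
}
```

Running `iommu_group_members 0000:03:00.0` on the node prints one PCI address per line. Every address in that output has to be enabled for passthrough together with the GPU.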

How is GPU passthrough in Harvester HCI compared to Proxmox?

As mentioned above, the process of GPU passthrough in Harvester HCI was straightforward and was doable from start to finish via the web GUI. Meanwhile, with Proxmox, I had to tweak my host left and right. In no particular order:

- I had to edit the kernel command line. Some parameters are either deprecated or only work with certain GPUs. It took me roughly a week to find an explanation and a working solution, hidden in some corner of the Internet.

- I had to blacklist the host machine driver to prevent the host machine from claiming the GPU.

- I had to specify which kernel drivers are loaded.

- I had to try out every q35 machine version to find out which version works with which specific GPU.

- I had to (in some cases) fake the vendor ID, sub-vendor ID, device ID, and sub-device ID, in the hope that a certain ID would make my GPU work.

- I had to figure out whether certain checkboxes were required for my GPU or not.

- I had to see if my GPU only works in certain PCIe slots.

- And more, and more ...

The experience was much better on Harvester HCI when it came to GPU passthrough. It does not mean the steps I faced in Proxmox are not there. It means these troublesome steps are already abstracted away by Harvester's pcidevices-controller addon (here is a link to the addon GitHub repository if you want to look at their implementation).
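For a sense of what gets abstracted away, the host-side items from that list (kernel command line, driver blacklist, module loading) usually boil down to a few lines of configuration. The sketch below only prints them and writes nothing to disk. It is a generic illustration based on common community guides, not an exact Proxmox setup and not a description of what pcidevices-controller does internally. The function name is hypothetical, the kernel flags assume an Intel host, and `1002:73bf` is the vendor:device pair of the RX6800 that appears later in this article.

```shell
#!/bin/sh
# Print (not apply) the host settings that manual passthrough guides commonly ask for.
# $1 = vendor:device ID pair of the GPU, e.g. 1002:73bf
# $2 = host driver that should not claim the GPU, e.g. amdgpu
print_manual_passthrough_config() {
    ids="$1"
    host_driver="$2"
    cat <<EOF
# Kernel command line additions (Intel host assumed)
intel_iommu=on iommu=pt
# /etc/modprobe.d/vfio.conf
options vfio-pci ids=$ids
softdep $host_driver pre: vfio-pci
# /etc/modprobe.d/blacklist.conf
blacklist $host_driver
EOF
}

print_manual_passthrough_config 1002:73bf amdgpu
```

Each GPU, board, and kernel version may need a different combination of these lines, which is where the week of trial and error tends to go.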

So, is Harvester HCI the winner here? Well, sort of ...

Harvester HCI GPU passthrough is not bug-free and foolproof

Although the pcidevices-controller addon reached a stable stage and gave me a very positive user experience, I did face certain issues and odd behaviors. If I narrowed my research to Harvester HCI-related content, I would not be able to find any useful information. Here was where the Proxmox community came into play. Between the two hypervisors, Proxmox is much more popular, and the amount of content surrounding it is vast. The issues and odd behaviors I faced when passing through a GPU to a Windows VM on Harvester HCI were, one way or another, answerable by digging through Proxmox's official guide, Reddit, and forum posts. After all, both platforms are Linux-based and utilize the same technology in some areas. For example, both use q35 as the standard machine type for VMs, and q35 does not support Resizable BAR (Smart Access Memory) at the moment. Therefore, Resizable BAR has to be disabled in the BIOS, or else code 43 happens. Anyway, here are some issues I faced.

My GPU was in the passthrough mode but was bound to amdgpu kernel driver

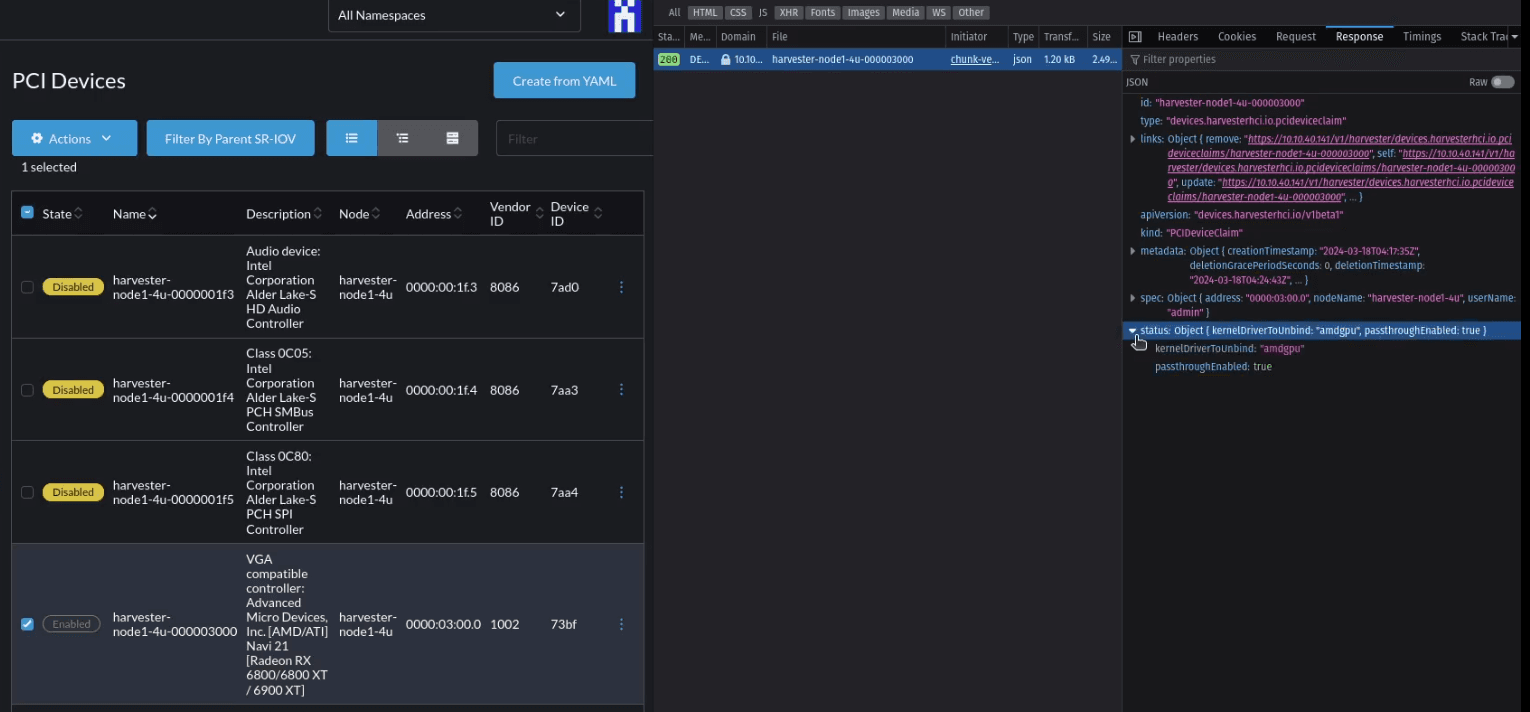

In my first attempt to pass through my Yeston RX6800 16GD6 GPU, somehow it was stuck in a "false positive" state. What was that like?

- In the web GUI, the GPU was shown as successfully passed through and assigned to the VM. However, I could not disable the passthrough from the web GUI, even though the API request returned "success". Notice the kernelDriverToUnbind field in the network response? In the picture it was amdgpu, but sometimes it was vfio-pci.

Fail to disable GPU passthrough via Harvester HCI web GUI, but shown as a successful API request.

- The GPU was stuck with the amdgpu kernel driver, but the screen could not display anything, as if the GPU was used by vfio-pci.

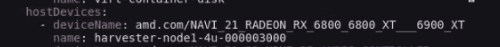

My RX6800 was bound to the amdgpu kernel driver, but its audio device used vfio-pci successfully.

- The passthrough was bound to a PCI address. My other GPU, which was also initialized with the same PCI address, faced the same issue.

- If the PCI address was changed (e.g., the GPU was installed in a different PCIe slot, or different GPU models or devices were used), the issue disappeared. This had the side effect of shuffling the PCI addresses of multiple important devices such as the host NIC, which required me to change /oem/90_custom.yaml to update the persistent config during the troubleshooting process.

- The passthrough state was maintained. Even if I disabled and re-enabled pcidevices-controller, or reset my host, this bug did not go away.
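A quick way to confirm this kind of mismatch from the node itself is to read the driver symlink in sysfs, which reports the same binding that lspci -k shows. This is a minimal sketch, assuming shell access to the node. `pci_driver_in_use` is a hypothetical helper name, and `0000:03:00.1` as the address of the GPU's audio function is an assumption based on the usual layout.

```shell
#!/bin/sh
# Print the kernel driver currently bound to a PCI function, or "none".
# $1 = PCI address (e.g. 0000:03:00.0)
# $2 = sysfs root, defaults to /sys (hypothetical parameter for mock trees)
pci_driver_in_use() {
    link="${2:-/sys}/bus/pci/devices/$1/driver"
    if [ -L "$link" ]; then
        # The symlink target ends with the driver name, e.g. .../drivers/amdgpu
        basename "$(readlink "$link")"
    else
        echo "none"
    fi
}
```

In the state described above, `pci_driver_in_use 0000:03:00.0` would print amdgpu while `pci_driver_in_use 0000:03:00.1` would print vfio-pci.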

As you may guess, I was eventually able to resolve this weird bug by piecing together information from Proxmox communities. What was the solution?

- Removing the assignment via web GUI was not possible, so I modified the VM YAML config instead.

Remove GPU device assignment via VM YAML config.

- I connected to my Harvester HCI node over SSH with an admin account, then switched to the root user and executed the following commands.

```shell
# Change from an admin user (rancher@node_ip) to root user
sudo -i

# Unbind from amdgpu kernel driver.
# Syntax: echo "pci_address" > /sys/bus/pci/drivers/<kernel_driver_in_use_name>/unbind
echo "0000:03:00.0" > /sys/bus/pci/drivers/amdgpu/unbind

# Syntax: echo "0x<gpu_vendor_id>" "0x<gpu_device_id>" > /sys/bus/pci/drivers/vfio-pci/new_id
echo "0x1002" "0x73bf" > /sys/bus/pci/drivers/vfio-pci/new_id

# After the new_id command, somehow the GPU was bound again,
# so I need to unbind one more time
# Syntax: echo "pci_address" > /sys/bus/pci/drivers/<kernel_driver_in_use_name>/unbind
echo "0000:03:00.0" > /sys/bus/pci/drivers/amdgpu/unbind

# Bind GPU to vfio-pci kernel driver.
# Syntax: echo "pci_address" > /sys/bus/pci/drivers/vfio-pci/bind
echo "0000:03:00.0" > /sys/bus/pci/drivers/vfio-pci/bind
```

GPU device used vfio-pci as shown from Harvester HCI API, which was similar to lspci -k.

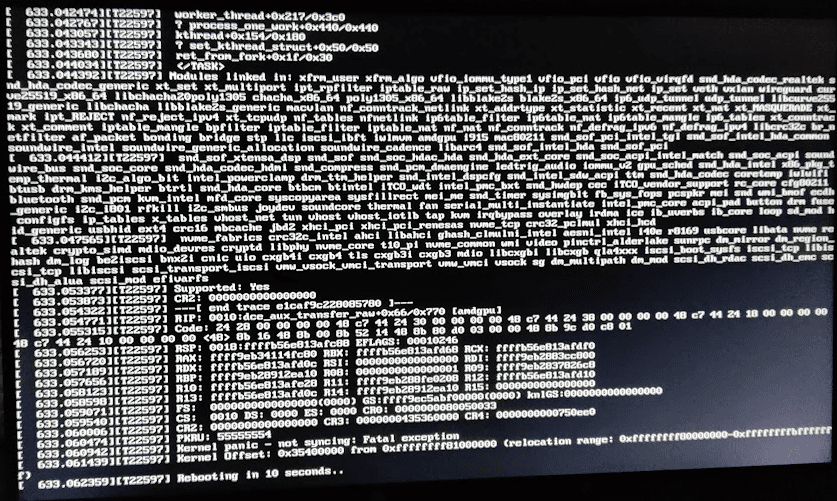

Then, I was able to disable the GPU passthrough from the web GUI and re-enable it accordingly. However, I still was not able to start the VM (unschedulable) due to the error:

0/1 nodes are available: 1 insufficient amd.com/NAVI_21_RADEON_RX6800_6800XT__6900XT. preemption: 0/1 nodes are available: 1 No preemption victims found for incoming pod.

Then, I restarted the node and reran the series of commands above. After that, my VM was able to start with the RX6800 GPU passed to it.
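Because the rebind has to be repeated, it can be convenient to wrap it in a function. The sketch below is a variation and not the exact sequence above: it uses the kernel's driver_override attribute together with drivers_probe instead of new_id, an approach that appears in many passthrough guides. It has not been verified on Harvester HCI, so treat that part as an assumption. The function name and the optional sysfs-root argument are hypothetical.

```shell
#!/bin/sh
# Sketch: hand one PCI function to vfio-pci via driver_override.
# $1 = PCI address (e.g. 0000:03:00.0)
# $2 = sysfs root, defaults to /sys (hypothetical parameter; point it at a
#      mock tree for a dry run)
# On a real host this must run as root with the vfio-pci module loaded,
# and it carries the same crash risk as any manual rebind.
rebind_with_override() {
    addr="$1"
    sysfs="${2:-/sys}"
    dev="$sysfs/bus/pci/devices/$addr"

    [ -d "$dev" ] || { echo "no such PCI device: $addr" >&2; return 1; }

    # 1. Tell the PCI core that only vfio-pci may claim this function.
    echo "vfio-pci" > "$dev/driver_override"

    # 2. Release the function from its current driver, if it has one.
    if [ -e "$dev/driver" ]; then
        echo "$addr" > "$dev/driver/unbind"
    fi

    # 3. Trigger a new probe; the override makes vfio-pci the only candidate.
    echo "$addr" > "$sysfs/bus/pci/drivers_probe"
}
```

Usage on the node would be `rebind_with_override 0000:03:00.0` after `sudo -i`. Since the override is set per device, other devices that share the same vendor and device IDs are left alone.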

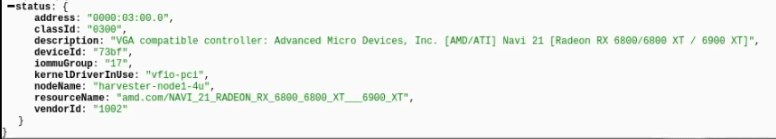

Unfortunately, the solution is not permanent. From time to time, I continue to face the same behavior, and it usually seems to happen around the time I swap PCI devices around (changing PCI addresses). The solution itself is also not perfect and may lead to various weird issues like:

- Kernel panic, fatal exception, and system crash.

- Infinite loop of amdgpu: trn=2 ACK should not assert! wait again!.

- An error message displayed on the node's display output: [C14] Disabling IRQ #16.

An example of kernel panic, which happens when unbinding the amdgpu or binding the vfio-pci kernel driver.

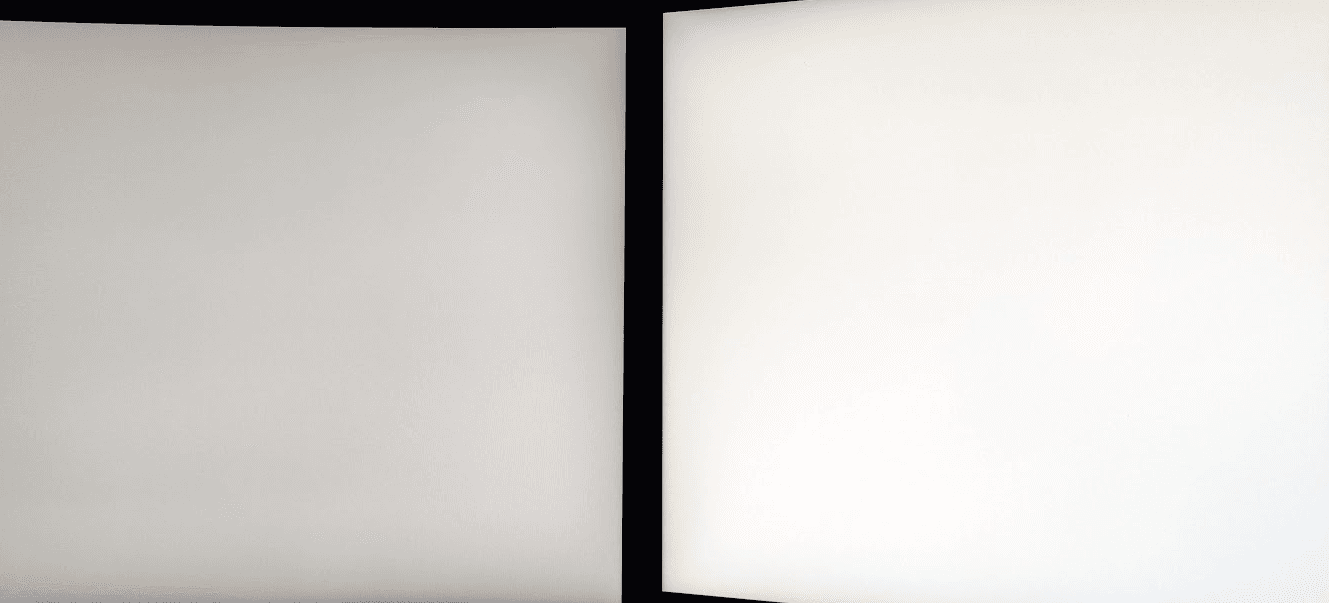

This might be anecdotal evidence, but I noticed that when unbinding amdgpu resulted in a "white screen of death", it meant the process was going as expected, and vfio-pci would be bound properly afterward.

Unbinding amdgpu resulted in a white screen of death, signaling a successful attempt.

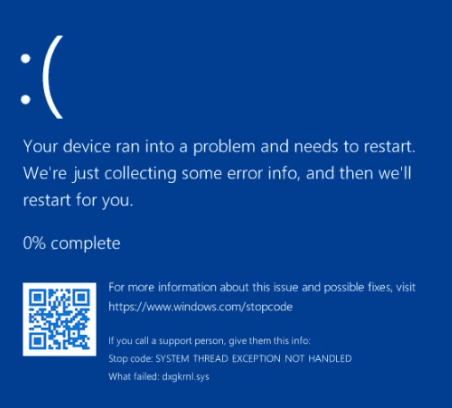

I was unable to install the Windows driver for my RX6800 GPU

When installing the AMD GPU driver on Windows, I always use the AMD Adrenalin auto-installer for convenience. At that point, my RX6800 GPU was properly recognized and displayed the image on my screen. However, every driver installation attempt resulted in a BSOD with a dxgkrnl.sys fatal error. Even the Display Driver Uninstaller utility triggered the same issue. My guess is that anything touching areas related to the GPU driver would cause the BSOD. Even with the verbose logging on Windows, I was not able to figure out the root cause. Even after reinstalling the Windows VM, the BSOD issue remained. So, I decided to try another approach.

SYSTEM_THREAD_EXCEPTION_NOT_HANDLED at dxgkrnl.sys when installing AMD GPU driver on Windows 11 VM.

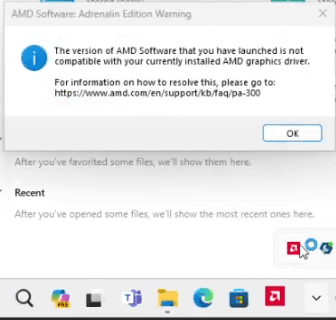

Modern drivers can most likely handle multiple devices in the same family or generation. Luckily, I have a spare RX6400 GPU, so I decided to try that with my Windows VM. As expected, the GPU was properly recognized, and the driver installation, along with uninstallation, went through smoothly. After that, I powered off my Harvester HCI host to swap the RX6800 back in, and voila, no more BSOD. There was a driver compatibility issue as reported by AMD Adrenalin, but after a few restarts, AMD Adrenalin finally picked up my RX6800.

AMD Software: Adrenalin Edition cannot start due to driver compatibility issue.

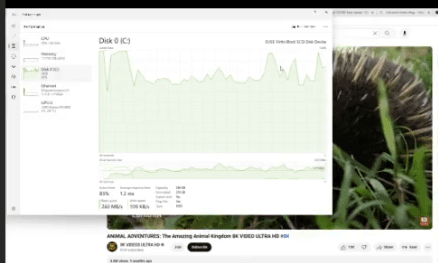

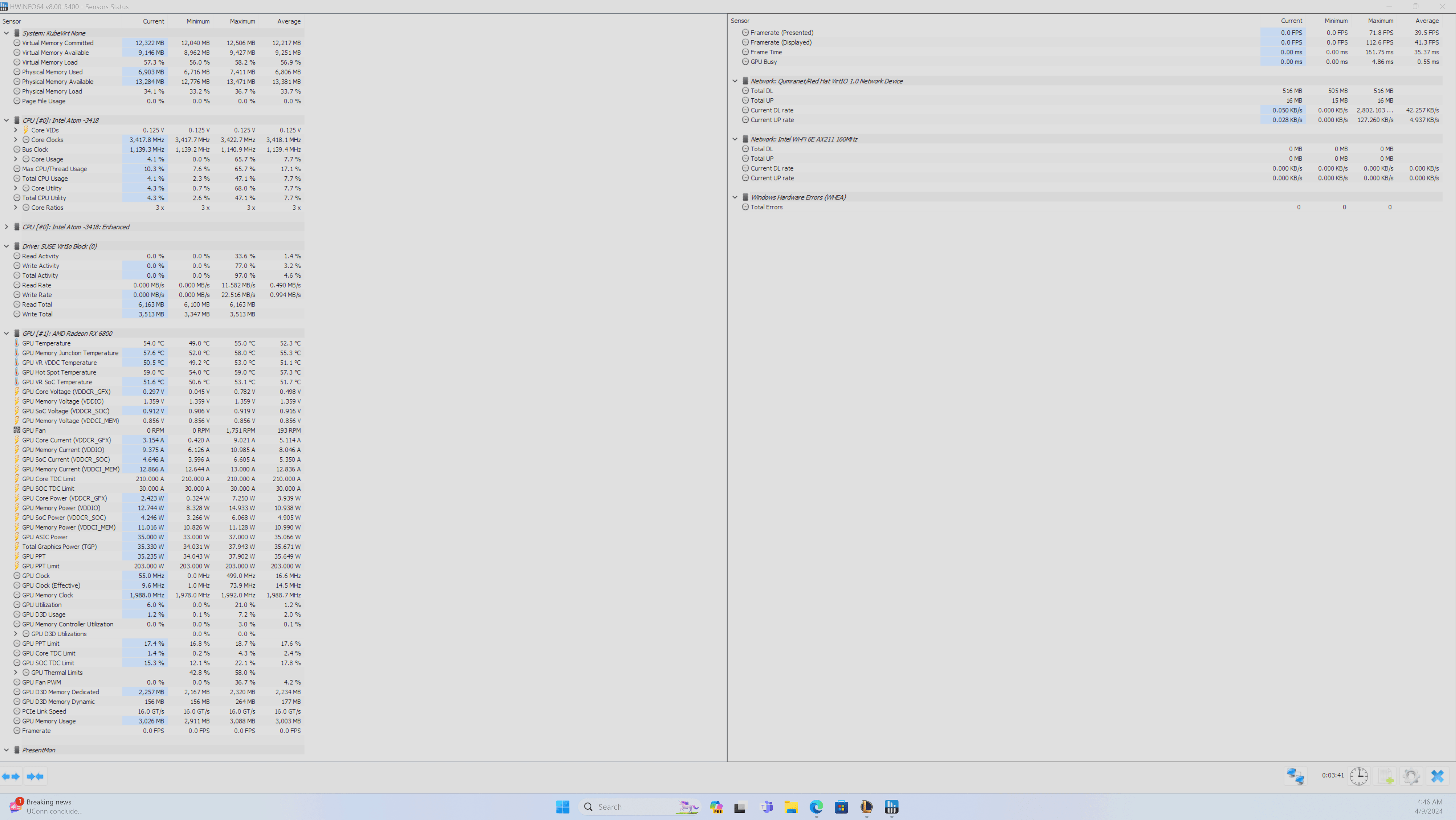

Virtual Network Computing (VNC), and graphic processing priority

In Proxmox, I have never been able to use both the VNC and GPU passthrough functionality for remote gaming. If a GPU is passed through, Proxmox VNC has no display, even with different options like VMware compatible display. However, the VNC in Harvester HCI is treated as a dedicated display, with a fixed resolution of 800 x 600. That was great, but the VNC display used a Microsoft Basic Display Adapter. As a software-based graphic processing solution, the performance is decent for basic desktop usage, but subpar in any graphics-related task such as YouTube video decoding, or gaming (1 FPS, no joke). The custom graphics option in Windows settings was also useless.

To make the AMD driver operate, I had to disable the Microsoft Basic Display Adapter in Device Manager. Oddly though, the VNC display still worked, and I think it utilized the AMD driver instead.

Not the best picture, but this shows the GPU decoder was not utilized when playing 4K and 8K YouTube vp9 encoded video (based on the GPU graph at the bottom of Task Manager).

Is Intel UHD 770 of Intel 13th Gen supported under GPU passthrough?

Unlike my dedicated GPU, I was not able to pass through the integrated GPU, Intel UHD Graphics 770. From my research, I found mixed answers. Some said the Windows driver does not yet support GPU passthrough for Intel 12th, 13th, and 14th gen. However, I also found anecdotal evidence of people successfully passing through the latest UHD Graphics GPU to a Windows VM. I can say that the GPU passthrough works fine in Linux-based VMs, so I think this is a Windows driver issue only.

This is also an example of an issue that I had to search for in the Proxmox community, because Harvester HCI queries did not return useful information.

Side note: Harvester HCI does not support USB device passthrough as of v1.3.0

Considering my Windows VM is for entertainment purposes, I need it to have Human Interface Devices (HIDs) such as a keyboard, a mouse, a speaker, etc. There are two ways to satisfy this requirement, either passing through my USB controller to the Windows VM or passing through a specific USB device to my Windows VM.

In my case, I mostly daily drive Bluetooth devices, so I only need to have Bluetooth enabled in Windows. My AX211 CNVi adapter has two functions: one for Wi-Fi, which uses a PCIe connection, and one for Bluetooth, which uses a USB connection. This means I did not have to pass through the whole wireless adapter because, from Linux's perspective, there are two devices on two connections. Even though my node's motherboard has multiple physical USB controllers, Linux sees only one.

```shell
# Only a single USB controller shows up
00:14.0 USB controller: Intel Corporation Alder Lake-S PCH USB 3.2 Gen 2x2 XHCI Controller (rev 11)
```

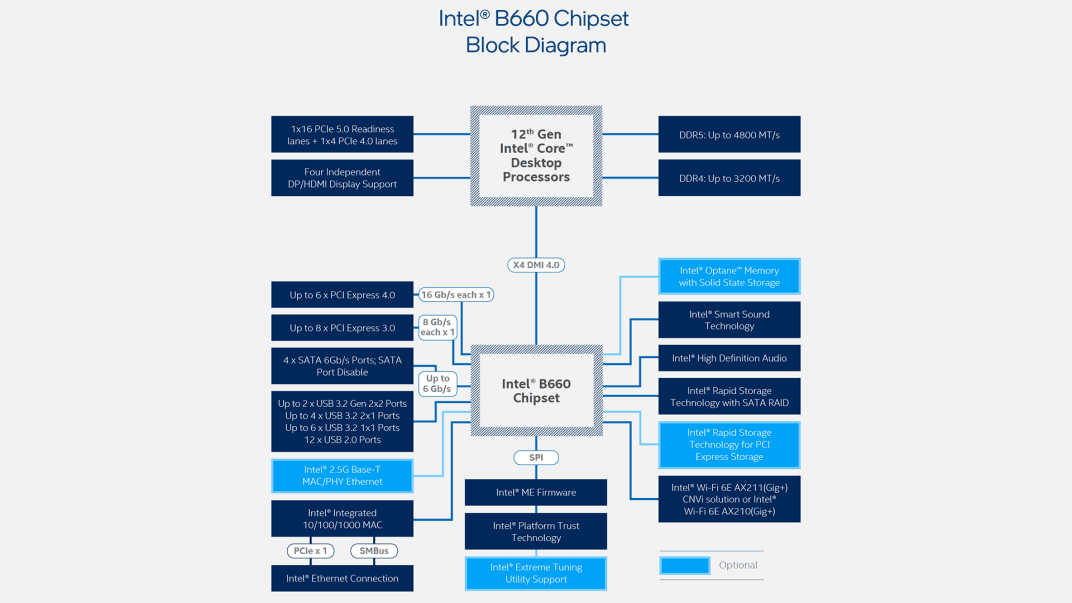

I think this is because, on the Intel 13th-generation platform, all USB controllers on the motherboard are connected back to the motherboard chipset, which is connected to the CPU via a PCIe connection. This means if I pass through my USB controller, all USB ports are disabled on the host.
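The same observation can be made without lspci by looking for PCI functions whose class code starts with 0x0c03 (serial bus controller, USB) in sysfs. This is a minimal sketch. The function name and the optional sysfs-root argument are hypothetical, and the full address 0000:00:14.0 assumes PCI domain 0000.

```shell
#!/bin/sh
# Print the PCI address of every USB host controller visible to Linux.
# $1 = sysfs root, defaults to /sys (hypothetical parameter for mock trees)
list_usb_controllers() {
    for dev in "${1:-/sys}"/bus/pci/devices/*; do
        [ -r "$dev/class" ] || continue
        # The class file holds values like 0x0c0330 (xHCI); 0x0c03 means USB.
        case "$(cat "$dev/class")" in
            0x0c03*) basename "$dev" ;;
        esac
    done
}
```

A single line of output from `list_usb_controllers`, such as 0000:00:14.0, means every USB port on the node sits behind the one controller that would be handed to the VM.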

This diagram illustrates the USB connections on Intel B660 chipset, which supports 12th, 13th, and 14th generation Intel Core processors (Source: Intel Product Brief).

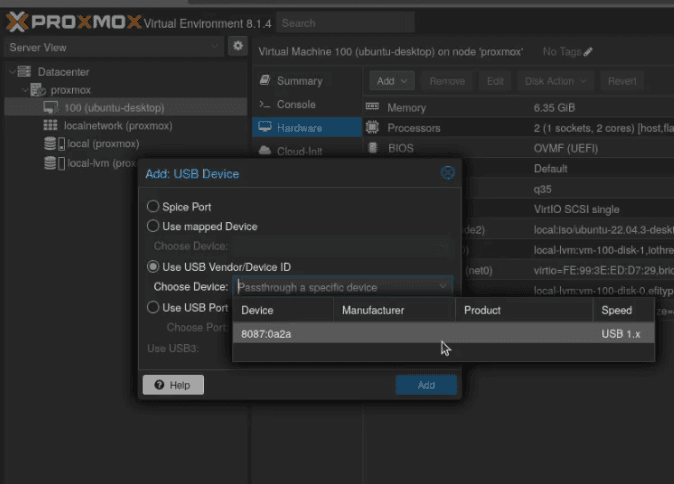

In Proxmox, it is possible to pass through a single USB device, so I can simply choose just my Bluetooth PHY. All is good, but in Harvester HCI, this functionality is not available yet. Issue #1710 on Harvester HCI's GitHub, a feature request for this, has been open since 2021. At last, the Harvester HCI team has added this feature request to the version 1.4.0 milestone. Until then, I have to pass through my whole USB controller to have Bluetooth functionality in my Windows VM.

Proxmox supports USB device-specific passthrough, which detects my Bluetooth PHY.

Conclusion

The user experience on Harvester HCI has improved version by version. Feature-wise, I do not think it is at parity with Proxmox yet, but that is hard to say because the two target different audiences and goals, so they might not be comparable at all. From my perspective though, the PCI passthrough functionality, especially for GPUs, is a much better experience on Harvester HCI. I call it a win, but your experience might be different. In any case, if you have not tried Harvester HCI yet, give it a try, and have fun homelabbing.

Time to drive my AMD Yeston RX6800 16GD6 in a Windows 11 VM!!!
